Our web applications can trust the motives of their users and the information they provide

– No One Ever

Is your web application serving up static data, pages that never change and don’t accept any user input implicitly or explicitly?

If yes, we’re done here. This post isn’t for you.

But what are the chances of that? Probably zero. This is Web x.0, and most likely you’re serving up dynamic pages that your visitors interact with, whether they’re searching for something on your site, logging in, leaving comments, navigating to a URL that includes parameters, or performing any number of other common activities. If that sounds more like your web application, then read on.

In 2014, Cross-site Scripting (XSS) was identified as the most frequently found vulnerability among those tested for in web applications. More concerning, OWASP ranks it #3 in its top 10 web application security flaws, a list ordered by prevalence and business impact. Like trash attracts flies, the ever-evolving user immersion of today’s web applications is a playground for vulnerabilities such as XSS. But why is that, and what can we do about preventing XSS in ASP.NET web applications?

Preventing XSS in ASP.NET Made Easy?

If you have spent any time attempting to wrap your head around XSS, you might, like many, have come away feeling overwhelmed and perplexed. We have made it that way by defining 5 different terms for small variations in how the vulnerability can be exploited, and by publishing a plethora of information on ways to implement mitigations. In reality, it can be much simpler and more straightforward.

The key to understanding XSS lies in recognizing that there are only 2 key players involved. A basic understanding of those 2 players and their roles will empower you to identify XSS vulnerabilities in your web application. Furthermore, coupling that information with the tools I provide will help you take a proactive approach to protecting against this type of vulnerability.

Those key players are the browser and external sources. Let’s start off by understanding the browser’s role.

Execute All the Things!

For you seasoned web developers, the following is going to be a trivial example. But for the newly indoctrinated or those making a foray into web development, the following is a very high level example of the flow for a client requesting a site such as http://example.com.

I highly recommend reading How Browsers Work for a very technical outline of, you guessed it, how browsers work.

We can take a simple approach and divide all the information the browser receives from the web server into two camps: data and instructions. When the browser is executing a JavaScript script, for example, we can say it is processing that information within an instruction context. Conversely, when the browser is displaying plain text, this can be viewed as a data context. However, when our web application receives information that we expect to be simply data but that instead contains instructions masquerading as data, the browser will execute those instructions, since, generally speaking, browsers do not have any concept of malicious vs. non-malicious.

The key here is “information our browser receives”. Where does that information come from, only the developer? Or, does it contain information provided by an external source? This leads us to the second key player in understanding XSS.

Note: I said earlier that browsers don’t have a concept of malicious vs. non-malicious. However, modern browsers are getting better at providing native mitigations and implement a number of constraints and rules that help mitigate a number of vulnerabilities, some of which are categorized as XSS. Unfortunately, these constraints and rules vary from browser to browser and relying on the protection of one browser might leave you vulnerable in another. They should always be viewed as icing on the cake.

External Source

While the browser’s execution of instructions might be the catalyst, the cause of an XSS exploit comes from external sources.

One of the many ingredients that go into a web application providing a rich and immersive user experience is user input. Almost all cases of XSS vulnerabilities originate from user input, and some would go as far as saying all cases. As mentioned at the beginning of this article, user input can originate from a number of sources such as form inputs, blog post comments and query string parameter values of a direct URL, to name just a few. In each of these examples, the user, an external source, is providing information to our web application that we may then do something with.

Therefore, when it comes to understanding the role of external sources in XSS, we can identify a potential vulnerability anywhere our site accepts user input that is not properly handled. Before getting to the “properly handling” part, let’s look at an easy example to drive the above home.

The Case of the Curious Comment

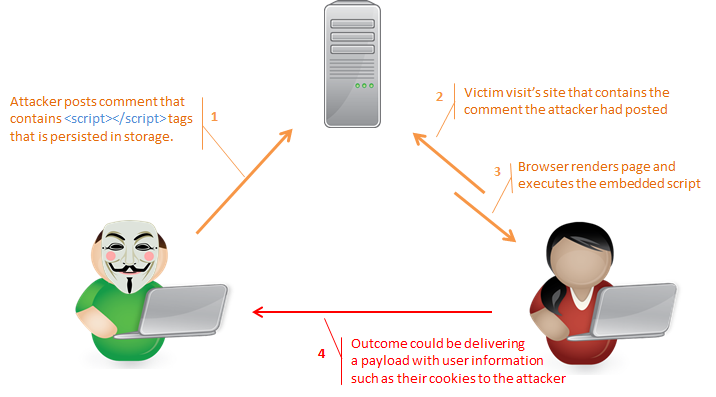

The following example outlines how a malicious script can be injected into a page so that a separate victim, on a separate computer, at a separate location, is directly affected, and what kind of malicious act the script might perform.

Your company’s strict licensing and security policy doesn’t allow the use of third-party tools, so the new article comments feature they want to make available on the company’s website must be developed in-house from the ground up. With the new comment feature available to users, the following scenario plays out.

In the example, a malicious user is allowed to embed a <script> HTML tag in their comment, which ultimately gets saved to storage by the server. When anyone visits the page that contains the comment, the server serves all HTML, CSS, JavaScript and other resources pertaining to the page to the victim’s browser. In turn, the victim’s browser renders all HTML and executes any scripts, including the one embedded in the comment that the victim’s browser received from our server.

We can see here that an attacker’s user input was injected, persisted, delivered and executed on another user’s computer. The outcome can be any number of things, such as the theft of the user’s cookies shown in the diagram, which could allow the attacker to impersonate the victim.
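To make the scenario concrete, here is a minimal C# sketch of the difference between echoing a stored comment as-is and HTML-encoding it first. The `CommentRenderer` class and its method names are hypothetical, invented for illustration; `WebUtility.HtmlEncode` is the framework's built-in encoder and is used here only as a stand-in for the encoding options covered later in this article.

```csharp
using System.Net;

// Hypothetical helper illustrating the comment scenario; not part of any framework.
public static class CommentRenderer
{
    // Vulnerable: the stored comment is written into the page as markup,
    // so an embedded <script> tag would run in the victim's browser.
    public static string RenderUnsafe(string storedComment)
    {
        return "<p>" + storedComment + "</p>";
    }

    // Safer: the comment is HTML-encoded, so the browser displays it as text.
    public static string RenderEncoded(string storedComment)
    {
        return "<p>" + WebUtility.HtmlEncode(storedComment) + "</p>";
    }
}
```

Given a comment such as `<script>alert(1)</script>`, `RenderUnsafe` returns the tag intact, while `RenderEncoded` returns `<p>&lt;script&gt;alert(1)&lt;/script&gt;</p>`, which the browser shows as plain text.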

Recap

Based on everything so far, we can summarize that an XSS vulnerability can exist anywhere in our web application where an external source, such as user input, is allowed to supply information, because that information has the potential to carry instructions, such as JavaScript, that could be harmful.

So would disallowing user input be the correct way to remove this vulnerability? Obviously not. The answer is properly handling user input: treating any externally supplied information as potentially harmful and neutering it.

Fix All the Things!

Again, if you have spent any time reading the numerous resources regarding XSS, you have no doubt come across the abundant variations of how this vulnerability can be exploited. However, in all cases, as long as you properly handle all user input AND use additional proactive testing methods, you will greatly reduce your chance of being exploited by this type of vulnerability.

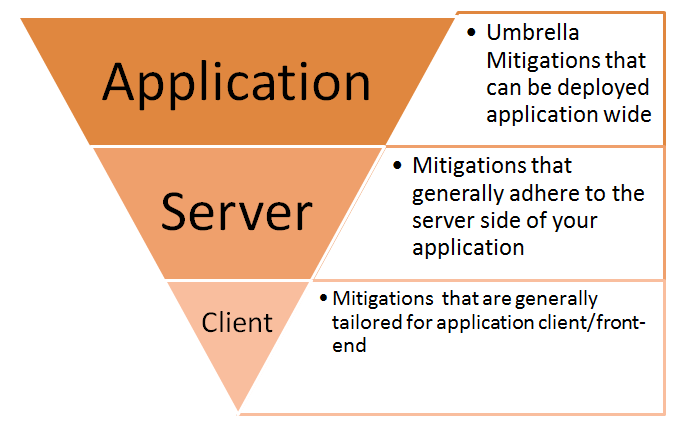

Unfortunately, along with the enormity of possible XSS vulnerabilities comes a vast amount of information on how you should secure your web application against exploitation. Therefore, instead of subjecting you to the fire-hose effect, I have formed a template constructed of 3 building blocks that will help you focus your efforts when applying mitigations to your web application. Furthermore, within each building block, you can easily swap out or add mitigations depending on your application’s needs or particular edge cases.

There is a lot of information in the sections below, but unlike other sources on handling XSS vulnerabilities, it can be consumed one focus area at a time and, more granularly, one recommended mitigation at a time.

In addition, whitelisting and blacklisting are two popular approaches to specifying the security rules (in different contexts) that we want our application to adhere to. In almost all cases, a whitelist approach is the recommended and most maintainable choice. This is the approach you will see used throughout the recommended mitigations, either as my recommendation or as a mitigation’s internal approach.

Application

At the application layer we are looking at deploying mitigations that can have a positive application-wide security impact. These are usually defense-in-depth mitigations that can touch both the client and the server.

HTTP Response Headers

There are a number of HTTP headers that we can pass back on all responses to limit assumptions made by the browser, control browser loading behavior and mitigate user specific security changes. Lucky for us, all the headers we are going to leverage are fully supported by modern browsers. Let’s start off by looking at the header that will have the biggest impact for mitigating XSS vulnerabilities; the Content Security Policy.

Content Security Policy (CSP)

The content-security-policy header allows applications to dictate to the browser which sources it is permitted to acquire resources from. An extensive list of web application resources can be controlled, such as scripts, styles, images, audio and video, form actions and embedded resources, just to name a few.

If we have made anything clear, it is that XSS vulnerabilities can allow attackers to load and execute scripts from other sources, such as a script embedded in a crafted URL in an email. Removing that threat by specifying the sources that the browser may load resources from significantly narrows the attacker’s abilities within our web application.

For example, restricting the loading of script and style resources to only our domain is as easy as specifying the script-src and style-src directives and setting their values to ‘self’.

Content-Security-Policy: script-src 'self'; style-src 'self'

But in a common scenario where we want to load resources from external sources such as CDNs, we only have to add those domains to the whitelist. We can see this in the following example, where we load script and style resources from Google’s CDN (https://ajax.googleapis.com) and limit image sources to our domain only with img-src. We are also using the default-src directive so that any resource type without its own directive must be loaded over HTTPS:

Content-Security-Policy: default-src https:; script-src 'self' https://ajax.googleapis.com; style-src 'self' https://ajax.googleapis.com; img-src 'self'

Therefore, when a victim receives a well-crafted link from an attacker that attempts to load an external script, the browser will refuse to load it, since its source is not included in the whitelist. It might go without saying, but if a trusted source (e.g. Google’s CDN) were compromised, we in turn would be vulnerable since we trust that source.
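Since a policy is just a series of directive names, each followed by its whitelisted sources and separated by semicolons, the header value can be assembled from structured data rather than a hand-typed string. The sketch below assumes a hypothetical `CspHeader.Build` helper (it is not part of ASP.NET) and only illustrates the syntax.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: turns (directive, sources) pairs into a CSP header value.
public static class CspHeader
{
    public static string Build(IEnumerable<KeyValuePair<string, string[]>> directives)
    {
        // Each directive becomes "name source1 source2"; directives are joined with "; ".
        return string.Join("; ",
            directives.Select(d => d.Key + " " + string.Join(" ", d.Value)));
    }
}
```

Passing `default-src` with `https:` followed by `script-src` with `'self'` and `https://ajax.googleapis.com` yields `default-src https:; script-src 'self' https://ajax.googleapis.com`.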

Implementing in ASP.NET

In the context of the ASP.NET MVC framework, we could accomplish adding this content-security-policy header to our responses in a number of ways.

Through an Attribute

    using System.Web.Mvc;

    public class ContentSecurityPolicyFilterAttribute : ActionFilterAttribute
    {
        public override void OnActionExecuting(ActionExecutingContext filterContext)
        {
            var response = filterContext.HttpContext.Response;

            // A C# string literal cannot span lines, so the policy is concatenated.
            response.AddHeader("Content-Security-Policy",
                "default-src https:; " +
                "script-src 'self' https://code.jquery.com https://ajax.aspnetcdn.com; " +
                "style-src 'self' https://ajax.aspnetcdn.com");

            base.OnActionExecuting(filterContext);
        }
    }

Then we can enforce this either globally, by registering it as a global filter in the ASP.NET pipeline, or individually on actions or controllers. Obviously, this is a very straightforward example, but it would not be difficult to enforce default behaviors (default-src) on all HTTP responses and layer on granular control at the controller or action level. Alternatively, you could use the OWIN modular approach to insert the header in the pipeline.
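Registering the attribute globally might look like the following sketch. It follows the `FilterConfig` convention used by the default ASP.NET MVC project template and assumes the `ContentSecurityPolicyFilterAttribute` class from the example above is in scope.

```csharp
using System.Web.Mvc;

public static class FilterConfig
{
    // Typically called from Application_Start in Global.asax.cs:
    //     FilterConfig.RegisterGlobalFilters(GlobalFilters.Filters);
    public static void RegisterGlobalFilters(GlobalFilterCollection filters)
    {
        // Every action in the application now runs the CSP filter.
        filters.Add(new ContentSecurityPolicyFilterAttribute());
    }
}
```

For more granular control, the same attribute can instead be placed on an individual controller or action as `[ContentSecurityPolicyFilter]`.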

Web.config / IIS

If using IIS 7 or above, we have the alternative of adding the header through the web.config by specifying custom headers to be included.

    <configuration>
      <system.webServer>
        <httpProtocol>
          <customHeaders>
            <!-- A policy value must start with a directive name; default-src is used here -->
            <add name="Content-Security-Policy" value="default-src 'self' https://ajax.googleapis.com" />
          </customHeaders>
        </httpProtocol>
      </system.webServer>
    </configuration>

As an alternative to the web.config approach, you can apply custom headers through IIS directly using IIS Manager.

The content-security-policy header affords an abundance of flexibility and control options, providing security and control for other areas that we have not covered here. For the full details and a breakdown of all of the content-security-policy directives, see the W3C documentation.

NOTE: Be aware that, unlike ‘self’, the source values ‘unsafe-inline’ and ‘unsafe-eval’ should be avoided if at all possible. Whitelisting either of them removes much of the security benefit afforded by the CSP.

Reflected-xss

Reflected-xss is actually just another directive that is part of the content-security-policy like the script-src and style-src directives we saw above. The difference is that it replaces an older/unstandardized header of X-XSS-Protection that a number of browsers didn’t support. I included it as its own separate mitigation since it is quite different in its intended goal.

Some browsers offer heuristic filters that try to filter out ‘potentially’ unsafe scripts that are reflected back to the client, that is, user-supplied data containing a harmful script that came in on the request and is now being echoed back in the response. Even when the user has turned the browser filter off, we can still enforce it by using the reflected-xss directive.

If you set this directive value to ‘block’, you will completely pull the plug on loading any resources on the page when the browser concludes that it has potentially discovered unsafe data in the response. Alternatively, you can filter out the potentially harmful script and allow the site to continue loading trusted resources by using the ‘filter’ value:

Content-Security-Policy: reflected-xss 'filter'

As far as ASP.NET implementation, this directive is added using the same above methods as described under Content Security Policy.

NOTE: This policy (and more so the original unstandardized X-XSS-Protection header) has received some legitimate negative feedback due to its ability to interfere with requests/responses where a legitimate <script></script> was part of the request (such as when allowing it as part of a search). Ensuring HTML encoding can neuter a vulnerability without interference by the filter. So know the limitations of this directive and, when it affects user experience, opt for other mitigations such as escaping (output encoding), which we’ll be looking at shortly.

Cookies

A typical goal of an XSS exploit is to acquire a user’s cookies, for a number of reasons which I have gone into extensively in this article/video.

HTTPOnly Flag

We have a few levels at which we can apply this mitigation. At a minimum, we can remove the ability to reach the cookie from client-side script by using the HttpOnly flag on the Set-Cookie header. So wherever we are setting and passing our application’s cookies, we can set the HttpOnly flag to true to accomplish this.

In ASP.NET, it can be as simple as setting the HttpOnly property to true on a CookieHeaderValue object that is then added to the cookies collection on the response headers:

    // nvc is assumed to be a NameValueCollection holding the cookie's values
    var cookie = new CookieHeaderValue("myapp", nvc)
    {
        // Domain and Path can also be set here
        HttpOnly = true
    };

    var response = new HttpResponseMessage(HttpStatusCode.OK);
    response.Headers.AddCookies(new[] { cookie });

As you can see, there are quite a few application-wide mitigations we can apply that cut across a number of application borders. Let’s now focus our attention on mitigations that apply specifically to the server.

Server

At the server layer, we are targeting ASP.NET framework-specific mitigations that will generally make up the majority of the steps we take to remove XSS vulnerabilities.

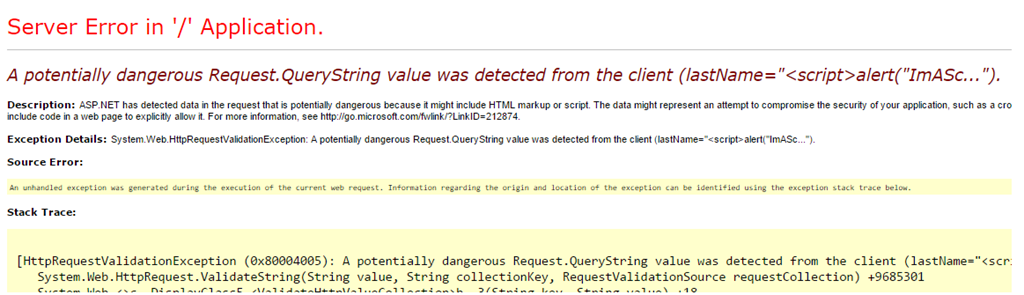

Request Validation

ASP.NET provides an out-of-the-box feature called Request Validation that automatically validates all incoming HTTP requests. With all the different ASP.NET frameworks and versions available, OWASP provides a quick-glance chart of the specific frameworks and their support for Request Validation.

Request validation attempts to detect any markup or code in a request and throws a “potentially dangerous value was detected” error message, returning a 500 HTTP status code.

Important! This is a mitigation that should be viewed as a defense-in-depth approach and absolutely not relied on by itself as a be-all end-all solution.

Nothing needs to be done to enable it, as it is active by default. There are cases when you might need to allow valid markup without it being blocked. This can be accomplished by applying the ValidateInput attribute to an ASP.NET controller or action and passing it the value “false”:

    [ValidateInput(false)]
    public ActionResult UploadMarkup(string someStr)
    {
        //...
    }

Request Validation can also be completely disabled by following the instructions provided in this MSDN article.

Manual Validation

We just looked at an out-of-the-box feature that ASP.NET provides for validating user input, which can be an extremely helpful mitigation in a defense-in-depth strategy. However, since we are in control of how our application handles user input, limiting the scope of what type of data can be submitted to our APIs can be another checkpoint for input validation, filtering out invalid data.

ASP.NET provides a number of flexible ways to manually validate input that reaches our APIs. However, I want to focus on a couple of simple-to-implement options for providing instant input validation. We can easily add data validation rules to models in ASP.NET MVC and Web API alike through the Data Annotations library. This allows us to be expressive about our expectations for the user input. For the most part, data annotations naturally apply a whitelisting approach.

The tricky part is validating strings. Even here, Data Annotations allow us to put restrictions in place, such as requiring a string to look like a Social Security Number, using the RegularExpressionAttribute:

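    using System.ComponentModel.DataAnnotations;

    public class PersonModel
    {
        //...FirstName
        //...LastName

        // The group makes ^ and $ apply to both alternatives.
        [RegularExpression(@"^(\d{9}|\d{3}-\d{2}-\d{4})$", ErrorMessage = "Please enter a valid Social Security Number")]
        public string Ssn { get; set; }
    }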
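Outside of MVC's automatic model validation, the same annotations can be exercised directly, which is handy in unit tests. The following is a minimal sketch using the `Validator` class from `System.ComponentModel.DataAnnotations`. The `SsnInput` model and `AnnotationCheck` helper are hypothetical names, and the pattern is a grouped variant of the Social Security Number expression so that the anchors apply to both alternatives.

```csharp
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical model used only for this illustration.
public class SsnInput
{
    [RegularExpression(@"^(\d{9}|\d{3}-\d{2}-\d{4})$")]
    public string Ssn { get; set; }
}

public static class AnnotationCheck
{
    // Returns true when every data annotation on the model is satisfied.
    public static bool IsValid(object model)
    {
        var context = new ValidationContext(model, null, null);
        var results = new List<ValidationResult>();

        // The final argument asks the validator to check all properties,
        // not just those marked [Required].
        return Validator.TryValidateObject(model, context, results, true);
    }
}
```

With this in place, `AnnotationCheck.IsValid(new SsnInput { Ssn = "123-45-6789" })` passes, while a value such as `<script>alert(1)</script>` is rejected because it does not match the whitelist pattern.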

When we need to control the logic that goes into validating user input, we can leverage the ValidationAttribute class. This class allows us to create our own annotations when the need arises, and there are a number of great articles to get you started. However, it is easy to inadvertently fall into a blacklisting approach when using the built-in regular expression data annotation or when creating our own custom data annotation, so be careful about how your logic or expression is applied.
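As a rough illustration of a custom annotation that stays on the whitelisting side, the sketch below accepts only characters it explicitly allows instead of trying to enumerate dangerous ones. The `PersonNameAttribute` name and its allowed character set are assumptions made for this example.

```csharp
using System;
using System.ComponentModel.DataAnnotations;
using System.Linq;

// Hypothetical whitelist-based annotation: only letters, spaces and hyphens pass.
[AttributeUsage(AttributeTargets.Property)]
public sealed class PersonNameAttribute : ValidationAttribute
{
    public override bool IsValid(object value)
    {
        // Leave "is it present?" checks to [Required].
        if (value == null)
        {
            return true;
        }

        // Anything that is not a string, or contains a character
        // outside the whitelist, is rejected.
        var text = value as string;
        return text != null
            && text.All(c => char.IsLetter(c) || c == ' ' || c == '-');
    }
}
```

Applied as `[PersonName]` on a string property, it accepts `Anne-Marie Smith` and rejects `<script>`. Validation like this narrows what gets in, but it is not a substitute for encoding on output.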

Content-Type and Character Set

In order to help the browser determine what type of information it has received, we can provide it with metadata about the information (data) in the form of a MIME type label specified on the content-type header. Therefore, when the browser receives JavaScript files (text/javascript), HTML (text/html) or a PNG image (image/png), it will know how to process them.

This article is not about MIME types. However, if the correct content-type is not specified, browsers will perform what is called content or character set sniffing in order to determine the content-type of a resource. When the browser doesn’t have a correct content-type or character set (which specifies the encoding), the door is left open for external input that tricks the browser into interpreting part of the response as HTML, which in turn can lead to an XSS exploit.

Depending on the framework in use, there are a number of ways that you can specify the content-type and character set. Obviously this can be important with ASP.NET MVC or Web API, for returning different payloads. Therefore, if needed, setting the content type might be done at the individual controller action of Web API by setting the Content property on a response:

    [HttpGet]
    public HttpResponseMessage GetCsv(string fileName)
    {
        var csv = LoadCsv();

        var response = Request.CreateResponse(HttpStatusCode.OK);

        // StringContent sets both the media type and the charset on the Content-Type header
        response.Content = new StringContent(csv.ToString(), Encoding.UTF8, "text/csv");

        return response;
    }

Alternatively, this can also be accomplished through the pipeline in an OWIN module, as the NuGet package Nancy does.

Data Encoding/Escaping

We have officially reached the mother lode of mitigations for XSS vulnerabilities. This is also where you might have found yourself highly confused if you have done any research before now, with the terms “encoding” and “escaping” used back and forth and interchangeably for both HTML and JavaScript. So, let’s take a quick moment and define what they actually mean.

It starts by going back to our original key player, the browser. When our web application receives information from an external source that we expect to be simply data but that instead contains instructions masquerading as data, the browser will execute those instructions. So if the heart of the vulnerability lies in the data being a subterfuge, then being able to tell the browser that this data should be treated only as data and nothing else will ensure that instructions can’t be executed. This is “escaping” (a.k.a. output encoding) in a nutshell.

Jeff Williams’s description of “escaping” (output encoding) and its role, on OWASP, is one of the best I have seen:

“’Escaping’ is a technique used to ensure that characters are treated as data, not as characters that are relevant to the interpreter’s parser. There are lots of different types of escaping, sometimes confusingly called output ‘encoding’. Some of these techniques define a special ‘escape’ character, and other techniques have a more sophisticated syntax that involves several characters.

Do not confuse output escaping with the notion of Unicode character encoding, which involves mapping a Unicode character to a sequence of bits. This level of encoding is automatically decoded, and does not defuse attacks. However, if there are misunderstandings about the intended charset between the server and browser, it may cause unintended characters to be communicated, possibly enabling XSS attacks. This is why it is still important to specify the Unicode character encoding (charset), such as UTF-8, for all communications.

Escaping is the primary means to make sure that untrusted data can’t be used to convey an injection attack. There is no harm in escaping data properly – it will still render in the browser properly. Escaping simply lets the interpreter know that the data is not intended to be executed, and therefore prevents attacks from working.”

Implementation

So now that we know that output encoding is how we let the browser know that the information it is receiving should be rendered as data and not instructions, how do we implement it? Well, first off, if you do any research, you will find a vast number of rules that hinge on questions such as:

- Are we working in an HTML context?

- What if this is a JavaScript context where we need to encode some data?

- Or maybe this is a blended context where we have HTML within JavaScript?

There are so many rules and variations to take into consideration when encoding properly that you are highly prone to making a mistake if you attempt to do it manually, which is never recommended. Therefore, when rendering any output that originated from an external source such as user input, the answer is to rely on an output encoding library specifically designed for tackling this problem.
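To give a feel for why context matters, the sketch below encodes the same untrusted string for two different destinations using helpers that ship with .NET: `WebUtility.HtmlEncode` for an HTML body and `HttpUtility.JavaScriptStringEncode` for a JavaScript string literal. These built-in helpers are swapped in here purely for illustration, and the `ContextEncoding` class name is hypothetical.

```csharp
using System.Net;
using System.Web;

// Hypothetical wrapper showing that each output context needs its own encoder.
public static class ContextEncoding
{
    // For text placed between HTML tags, e.g. <div>HERE</div>.
    public static string ForHtmlBody(string untrusted)
    {
        return WebUtility.HtmlEncode(untrusted);
    }

    // For text placed inside a quoted JavaScript string, e.g. var name = 'HERE';
    public static string ForJavaScriptString(string untrusted)
    {
        return HttpUtility.JavaScriptStringEncode(untrusted);
    }
}
```

The two results differ for the same input: `<` becomes `&lt;` for the HTML body but `\u003c` for the JavaScript string, which is why an encoder has to match the context it writes into.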

ASP.NET and the AntiXSS Library

ASP.NET makes it very easy to encode output and ensure the browser renders it without the jeopardy of any malicious script being executed, but that was not always the case. To have a better appreciation of what we have with @Razor templates, let’s see where we have been.

Early versions of ASP.NET did not encode:

<div><%= model.Address %></div>

Then they implemented a helper that provided the ability to encode output:

<div><%= Html.Encode(model.Address) %></div>

After that, they incorporated encoding implicitly using the new syntax that also ensured that you didn’t inadvertently double encode:

<div><%: model.Address %></div>

Finally, Razor lets you do it all in one step, output encoding by default:

<div>@Model.Address</div>

However...

There is one inherent problem with ASP.NET’s native encoding routines: they generally use a blacklisting approach to their encoding. Moreover, there are cases outside the simple encoding scenarios above, such as placing user input in an HTML attribute, or a mixed-context scenario that involves HTML being used in a JavaScript context. An HTML attribute context is different from a JavaScript or CSS context, and each requires a different encoding approach, as I mentioned before.

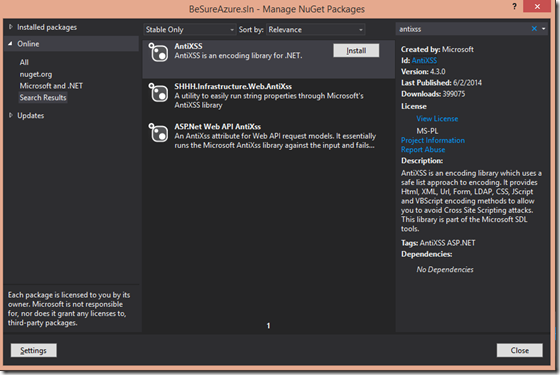

To the rescue comes the .NET AntiXSS library. This library uses a whitelisting approach, which has started to be included in even some of the encoding routines of ASP.NET 4.5+, as seen in the new System.Web.Security.AntiXss namespace. The library provides a number of helper functions for both output encoding and sanitization (which we haven’t even talked about yet) that cover HTML, JavaScript, XML, CSS and HTML attributes.

There are 3 steps to get up and running with AntiXSS library in your ASP.NET application:

Step 1: Include in your project

An easy and quick way is to pull in the AntiXSS library through its NuGet package. Alternatively, download and include the assemblies directly.

Step 2: Set AntiXSS library as your default encoding library through the web.config:

We can take it one step further and incorporate AntiXSS as our default encoding library by updating the encoderType attribute of the httpRuntime element in the web.config:

<httpRuntime targetFramework="4.5.1" requestValidationMode="2.0" encoderType="System.Web.Security.AntiXss.AntiXssEncoder" />

Step 3: (Optional) Utilize the Encoder and Sanitizer

Outside of using the library as the primary encoding provider, it’s not uncommon to need to put user data into contexts that each require a different encoding syntax. The library provides helper methods for handling cases where we need to inject encoded user input into HTML attributes, CSS, JavaScript and URLs. In other scenarios, where we want to allow user input in the form of HTML, we can use the sanitization functions the library affords to remove any elements of the supplied input that are not on its whitelist.

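    @* Html.Raw stops Razor from HTML-encoding the already encoded or sanitized string a second time *@
    <div data-personal="@Html.Raw(Encoder.HtmlAttributeEncode(Model.LastName))"></div>
    <div>@Html.Raw(Sanitizer.GetSafeHtmlFragment(Model.PersonalWebsiteUrl))</div>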

See the OWASP link for a consolidated list of resources for utilizing the AntiXSS library’s helpers, or you can simply utilize the library’s open-source repository. Visual Studio’s intellisense helps too!

Before going on, this might be a good time to stop and talk a little more about the concept of sanitizing user input and what this means.

UPDATE 3/11/15: As bjg pointed out in his comment, version 4.3 of the AntiXSS library will be the last version to provide a sanitizer. See their notes for recommended sanitizers to consider for future releases.

Sanitization

Attempting to manually sanitize data against a home-grown blacklist can be a disaster waiting to happen, simply because the creative ways attackers find to sneak data past your sanitization checkpoints are forever changing. Therefore, this is another chance to drive home the importance of leveraging a library whose main purpose is to facilitate proper sanitization. We’ll talk more about sanitization when we look at AngularJS’s implicit use of it under the Client section.

I Am Persisting This User Input, When Should I Encode It?

A common question I hear asked is “I am persisting this user input, when should I encode it?” It’s a fair question, and encoding before saving might seem like the logical conclusion. However, the general consensus is that encoding should not be done before persisting the input to storage, but rather when it is ready for display in an output context.

This is recommended so that we can completely eliminate the following scenarios:

- Double encoding.

- Assumptions that it was already handled properly.

- Possible data loss through truncation because encoded data is larger than original user input.

- Application requirements change. The need to consume the data differently (in a non-encoded state) is a real possibility.

- Less prone to errors – see #2.
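The double-encoding and data-growth concerns in the list above are easy to demonstrate. The sketch below uses the built-in `WebUtility.HtmlEncode`; the `EncodeTiming` class is a hypothetical name used only for illustration.

```csharp
using System.Net;

// Hypothetical helper contrasting encode-at-output with encode-before-save.
public static class EncodeTiming
{
    // Raw value is stored; encoding happens once, at display time.
    public static string EncodeAtOutput(string raw)
    {
        return WebUtility.HtmlEncode(raw);
    }

    // Value was encoded before saving, then encoded again by the view.
    public static string EncodeBeforeSaveAndAtOutput(string raw)
    {
        var stored = WebUtility.HtmlEncode(raw);
        return WebUtility.HtmlEncode(stored);
    }
}
```

For the input `Tom & Jerry`, encoding once gives `Tom &amp; Jerry`, which the browser displays correctly. Encoding twice gives `Tom &amp;amp; Jerry`, which the browser displays as the literal text `Tom &amp; Jerry`, and the stored value is already longer than what the user typed.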

If there is any takeaway from the server-side mitigations, it’s to understand the importance of escaping (output encoding) user input and of leveraging the proper library to ensure it is done correctly based on context. Together, the mitigations outlined here will help fortify the server side of the web stack. Let’s now turn our attention to what we can do on the front-end client.

Client

With the ever-growing popularity of JavaScript frameworks (specifically SPAs), being cognizant of the techniques (if any) that are used to properly handle output is paramount. Here we’ll look at some cases where vulnerabilities can be isolated to the client, as well as the security measures used by the popular AngularJS framework.

We have covered a lot, and what we have covered would probably address a vast majority of XSS vulnerabilities. But for some time now, the workload of web applications has been shifting to the front-end of the web stack. Because of this, the possibility of the front-end circumventing measures you have on the back-end is even more real. With this shift we have seen the increasing use of front-end frameworks. If you haven’t taken the time to examine what security measures your front-end framework of choice offers, now would be the time.

Every framework is different, as is the amount of importance each puts on security. To give an example of what I mean about being cognizant of your front-end framework’s security measures, let’s look at one of the currently popular JavaScript frameworks.

AngularJS, a popular JavaScript framework, does a lot out-of-the-box to mitigate XSS attacks. Without any additional work on your part, it will output encode HTML that it attempts to bind to the DOM (e.g. ngBind, ngRepeat, etc.).

<li ng-bind="todo"></li>
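To make the encoding step concrete, here is a minimal sketch of what output encoding does to a value before it is displayed. The escapeHtml helper is hypothetical and written purely for illustration. AngularJS does not expose a function by this name, and its bindings get the same result by treating the value as text rather than markup.

```javascript
// Hypothetical helper for illustration only (not part of AngularJS).
// Replaces the characters that have special meaning in HTML with entities,
// so the browser displays them instead of interpreting them as markup.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, '&amp;') // must run first so later entities are not re-encoded
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#x27;');
}

// A todo item supplied by a malicious user
const todo = '<img src=x onerror=alert(1)>';
console.log(escapeHtml(todo));
// prints: &lt;img src=x onerror=alert(1)&gt;
```

The encoded value shows up on the page as the literal text the user typed, and the onerror handler never runs.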

However, if you have HTML you want to have rendered as HTML and not just encoded output, they make it possible through the ngBindHtml directive:

<li ng-bind-html="todo"></li>

However, doing this on its own will give you an error about attempting to render “potentially” unsafe HTML:

Error: [$sce:unsafe] Attempting to use an unsafe value in a safe context.

As an additional security measure, AngularJS requires that, at a minimum, you provide it with a sanitizer, which is done by adding the ngSanitize module as an application dependency.

angular.module('questionnaire', ['ngRoute', 'ngSanitize'])

Then, using a whitelist approach, Angular will sanitize (remove) any potentially harmful data before rendering the HTML.
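The whitelist idea itself can be sketched in a few lines. This is not how ngSanitize is implemented (it parses the markup and strips what it doesn't allow); the sketch below takes a simpler route, assuming a small hypothetical list of attribute-free tags, by encoding everything and then restoring only the tags on the list. The names ALLOWED_TAGS and sanitizeWithWhitelist are made up for this example.

```javascript
// Hypothetical whitelist: only these tags, with no attributes, are allowed through.
const ALLOWED_TAGS = ['b', 'i', 'em', 'strong', 'p'];

// Encode every character that is significant in HTML.
function encodeAll(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#x27;');
}

// Whitelist approach: start from "nothing is trusted", then restore only
// what is explicitly allowed. Anything not on the list stays encoded.
function sanitizeWithWhitelist(input) {
  const encoded = encodeAll(input);
  // Matches an encoded opening or closing tag that has no attributes.
  const allowed = new RegExp('&lt;(/?)(' + ALLOWED_TAGS.join('|') + ')&gt;', 'gi');
  return encoded.replace(allowed, '<$1$2>');
}

console.log(sanitizeWithWhitelist('<b>Buy milk</b><script>alert(1)</script>'));
// prints: <b>Buy milk</b>&lt;script&gt;alert(1)&lt;/script&gt;
```

The point of the design is the default: a tag nobody thought about stays harmless, whereas a blacklist only stops the tags someone remembered to list.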

However, when you want to render a value as HTML without it being sanitized, Angular provides a Strict Contextual Escaping service ($sce) that allows you to do just that. It offers a number of helper methods for explicitly marking a value as trusted in the HTML, CSS, JavaScript and URL contexts.
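As a rough sketch of what that looks like, the controller below marks one value as trusted HTML with $sce.trustAsHtml. The controller name, the scope property and the markup are hypothetical; only $sce.trustAsHtml and the 'questionnaire' module from the earlier snippet are real.

```javascript
// Hypothetical controller; the names are illustrative, not from a real app.
function TodoController($scope, $sce) {
  // trustAsHtml tells Angular to render this value as-is in an ng-bind-html
  // binding. Only do this for content you fully control, never raw user input.
  $scope.staffNotice = $sce.trustAsHtml('<em>Reviewed by staff</em>');
}
// Explicit dependency annotation so injection still works after minification.
TodoController.$inject = ['$scope', '$sce'];

// Registration, assuming AngularJS is loaded on the page:
// angular.module('questionnaire').controller('TodoController', TodoController);
```

In the view, this value would be bound with ng-bind-html="staffNotice". Because it is explicitly trusted, Angular skips sanitization for it, which is exactly why it should be reserved for content that never originates from a user.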

Finally, one last tool provided by Angular is its ngCsp directive, which ties into the Content Security Policy we talked about earlier. It forces Angular to take a more stringent approach to JavaScript evaluation: it no longer takes eval-based shortcuts, at the cost of evaluating expressions up to 30% slower.

This isn’t an article on Angular security, and I will leave it to other articles to take you through the implementation process of the above Angular security features. However, as we have seen, the ever-increasing shift of responsibility to the front-end has made way for more and more front-end frameworks to spring up. Therefore, it is paramount to be cognizant of what security features your framework of choice has or lacks.

Tools

WhiteHat Security founder Jeremiah Grossman coined the term “hack yourself first” when talking about going on the cyber offense. The idea is to rattle your own web application’s cage to see exactly how weak it is. Because, truth be told, we can do all of this, but will you have caught every XSS vulnerability within your web application? How would you know? Short of magic, you cannot.

That is why it is highly recommended to employ tools such as an XSS scanner. However, this is not an article on how to use an XSS scanner or to promote one over another. Tools change, lose support and new ones come out quite regularly. Google has launched Firing Range, a free and open-source test bed for evaluating XSS scanners, that might be worth checking out. Whichever tools you choose, it is highly important that you take proactive steps to ensure you have found and mitigated any XSS vulnerabilities within your application.

The Takeaway…

When it comes to understanding XSS vulnerabilities, exploits and mitigations, there is a lot to consider and cover. However, we can make this easier on ourselves if we adhere to the following 6 takeaway points:

1. Understand that the browser and external sources work in tandem and are the key players involved when it comes to understanding XSS.

2. External sources and more precisely, any form of user input must be untrusted, assumed malicious and handled properly.

3. When applying mitigation techniques, leveraging a whitelisting approach is the safest and easiest to maintain. When choosing tools (e.g. AntiXSS library), try to pick ones that also leverage the whitelisting approach.

4. Use the Application, Server and Client focus areas to help make mitigations more digestible and more consistent in coverage.

5. Understand the different contexts where data can be encoded, such as JavaScript, CSS, HTML and URL, and utilize an XSS library for applying the appropriate encoding.

6. Finally, understand the importance of hacking yourself first and utilizing tools such as XSS scanners to check your work.
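To illustrate point 5, the sketch below shows why the context matters: the same piece of user input needs a different encoding when it lands in a URL than when it lands inside an inline script. Both helper names are hypothetical and exist only for this example. In a real ASP.NET application you would rely on an XSS library such as AntiXSS rather than hand-rolled functions like these.

```javascript
// Hypothetical helpers, named for illustration only.

// URL context: percent-encode a value used as a query string parameter.
function encodeForUrlParam(value) {
  return encodeURIComponent(String(value));
}

// JavaScript context: turn a value into a string literal that is safe to
// embed in an inline <script> block. JSON.stringify handles quotes and
// backslashes; the extra replacements keep sequences such as "</script>"
// from ending the script block early.
function encodeForInlineScript(value) {
  return JSON.stringify(String(value))
    .replace(/</g, '\\u003c')
    .replace(/>/g, '\\u003e')
    .replace(/&/g, '\\u0026')
    .replace(/\u2028/g, '\\u2028')
    .replace(/\u2029/g, '\\u2029');
}

const userInput = '"></script><script>alert(1)</script>';

// Same input, two contexts, two different encodings.
console.log('/search?q=' + encodeForUrlParam(userInput));
console.log('var q = ' + encodeForInlineScript(userInput) + ';');
```

Applying HTML encoding in either of these spots would be the wrong tool for the job, which is why picking the encoder by context is its own takeaway.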

There is a reason why XSS is one of the most prevalent and costly web application vulnerabilities in the wild. It is easy to discover, easy to exploit and, as software developers, easy to introduce. Therefore, we must be cognizant that anywhere in our web application that user input is accepted, it must be handled properly.