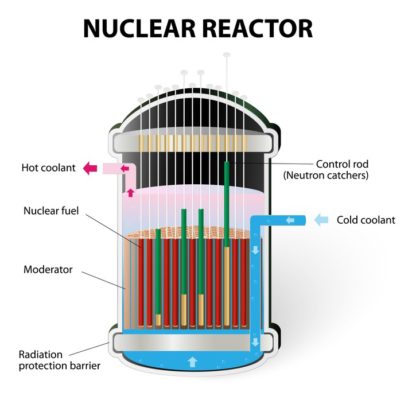

There are a number of moving parts in a nuclear reactor, all of which play a vital role in the reactor’s overall health. There is a delicate relationship between the components, and a deficiency in one can cause an overall failure of the reactor. We have seen such a scenario play out with the Chernobyl disaster and, more recently, the Fukushima Daiichi disaster, where reactor failures brought widespread devastation.

When we consider all the possible moving parts of a web application stack from a security perspective, the similarities to the nuclear reactor example are striking: a vast surface area (front-end client frameworks, web and application servers, platforms and databases, to name a few), the integral dependency of each part on the whole, and the potentially devastating business impact of an exploited vulnerability in any one of these parts.

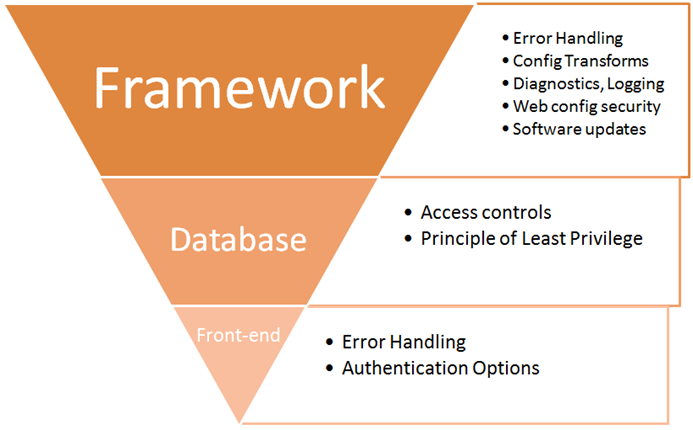

Security Misconfiguration is a term that describes when any one part of our application stack has not been hardened against possible security vulnerabilities. OWASP has listed Security Misconfiguration as #5 of their top 10 most critical web application security flaws. Security hardening is a broad term, but it can include proper error handling; keeping all software up to date (OS, libraries, frameworks, databases, etc.); proper handling of default accounts and passwords; following the security guidelines specific to each piece of software; and the list goes on. However, our focus will be the areas where we as developers can have the biggest impact and take immediate action when running ASP.NET, while also looking at the database and front-end of the web stack.

Our Approach to Security Misconfiguration

If you have been following the previous articles in this series, two key points have continued to surface as we analyze each of these security flaws, and they might not be immediately obvious: authorization failure and application leakage. I bring that up now so you have an opportunity to watch for the pattern as it surfaces again.

Due to the monumental size of this security flaw, we are going to approach it with a template as we have in the recent past to help keep us focused on the designated areas that we are going to address. We are also going to limit our focus to an ASP.NET and AngularJS scope.

Web Application / Framework

Because ASP.NET is an all-encompassing web application framework, there are a number of areas that are vulnerable to security misconfiguration out of the box and require explicit action to harden against exploitation. This is where we’ll spend the bulk of our time, addressing error handling, logging and tracing, sensitive data exposure and package management, so let’s get started.


Error Handling

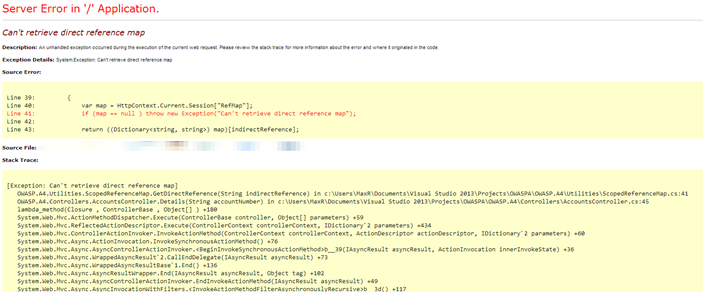

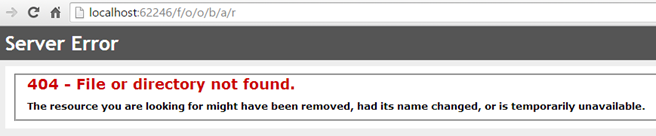

There are quite a few ways to handle errors in ASP.NET MVC and Web API: error handlers, filters and overrides, as well as granular error handling at the action or controller level. But very likely you have experienced those edge cases where some exception has managed to bubble up past your custom control gates unhandled, and you have been greeted by an error message such as the Yellow Screen of Death (YSOD).

Proper error handling in a production environment is all about ensuring we don’t leak application information. This is not only because of the possibility of disclosing sensitive data within error messages, but also because of the high probability that a malicious user could use that information in ways you would have never envisioned.

A perfect example is how we might indirectly disclose information about the internal libraries our application uses, which would allow a targeted attack. Take an example where an application has disclosed that it is using Entity Framework. If a zero-day exploit for EF is discovered, an attacker who already has this information knows exactly where to focus. In addition, even if a YSOD doesn’t directly expose a security flaw, it allows a malicious user to add to a profile they are building on your application.

ASP.NET has taken steps to default certain error handling settings to security-conscious values. Unfortunately, serving custom errors properly in ASP.NET is not easy. “What you talking ‘bout Willis?” I can hear many of you saying. However, I am not here to convince you of that, nor are we here to cover the different ways to handle errors within the application. What we are here to accomplish is the following:

- Using custom error pages to ensure we don’t leak application internals.

- Returning the proper HTTP Response Status

- ‘Covering Your Assets’ when ASP.NET lets us down or assumes the host will handle the error

We can control the application’s custom error behavior at the application level by setting or adding the <customErrors> element inside <system.web></system.web> in our web.config. Wiring our application to use custom errors and redirect users to our error.aspx page would look like the following.

<customErrors mode="On" redirectMode="ResponseRedirect" defaultRedirect="/error.aspx" />

Here we can set the mode to “On” to ensure that error messages are never disclosed (at the application level – more on this in a moment). The default is “RemoteOnly”, one of those security-first ASP.NET default settings. However, “RemoteOnly” will still divulge errors to local requests.

NOTE: The fact that our error page is saved as an .aspx page is one of those ASP.NET hoops required in order to send back the correct content type (text/html), which allows the HTML of our error page to be rendered properly.

With these settings, running into an internal error would result in the following URL redirect:

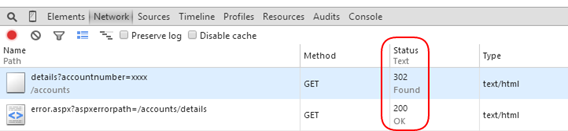

We’re returning our custom error page, but the URL shows that we have been redirected to it. Worse is what you might not notice: the HTTP status code that is returned.

It’s issuing a 302 HTTP status code for the redirect, but the kicker is that the error page itself is then returned with a 200, which is simply incorrect for an error response, SEO concerns aside. We can solve this by changing our redirectMode from “ResponseRedirect” to “ResponseRewrite”.

<customErrors mode="On" redirectMode="ResponseRewrite" defaultRedirect="/error.aspx" />

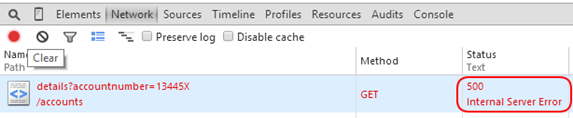

Now we can see that the original URL, /details?accountnumber=13445X, is preserved:

Better yet, it also ensures we return the proper HTTP response status code:

NOTE: Depending on which ASP.NET Visual Studio template you used, some default error views might be in effect and be served under certain circumstances. We are assuming none exist, as in an empty project template.

We can also get more granular within our <customErrors> section and specify the pages we want to return for specific HTTP status codes:

<customErrors mode="On" redirectMode="ResponseRewrite"> <error statusCode="404" redirect="/404.aspx"/> <error statusCode="500" redirect="/error.aspx"/> </customErrors>

Be aware that under certain circumstances, such as a 404 being handled in the ASP.NET pipeline and serving up our 404.aspx page (see the example above), a <% Response.StatusCode = 404 %> must be added to the top of the page in order to return the correct 404 HTTP status code.

So far, we have only been talking about internal errors that generate HTTP 500 status codes and are handled at the application level. However, there are times when errors bubble up past the framework, or ASP.NET decides to hand off the responsibility to the host, such as IIS. Consider a scenario where IIS is the host and we attempt to navigate to a route that doesn’t match, such as /F/o/o/b/a/r:

There are other cases, such as returning an HttpNotFound() ActionResult, that also trigger IIS intervention. In all of them we’re no longer serving up our custom error page. To cover these cases, we can add IIS-specific configuration by adding the <httpErrors> element to the <system.webServer> section of our web.config.

<httpErrors errorMode="Custom"> <remove statusCode="404"/> <error statusCode="404" path="404.html" responseMode="File"/> </httpErrors>

The errorMode defaults to “DetailedLocalOnly”, another one of those security-first default settings. However, we can lock down error details even further by setting it to “Custom”, which is consistent across all clients. Finally, the <httpErrors> configuration allows us to specify static HTML files, ensuring that we can serve up custom errors when something unexpected occurs with ASP.NET and we are operating outside the bounds of the framework.

In addition, with the responseMode set to “File”, the static file specified in path is served without changing the response’s HTTP status code.

Machine.Config

While our web.config governs a number of application-specific configuration settings, the machine.config governs a number of relevant settings for the computer the application is hosted on. Why do I bring this up? As with a number of security vulnerability mitigations, we can layer our defenses to help cover those times when something is forgotten, not realized or unexpected, and this is no different.

In a production environment, we might want to pull out all the stops and ensure that we have properly disabled all system-generated error messages, debug capabilities and trace output for remote clients. We can do that by utilizing the <deployment> element in the machine.config:

<deployment retail="true"/>

Depending on your versioning, you can find your machine.config here. Note that the <deployment> element is only honored at the machine level, so it cannot be moved into web.config to narrow its reach. That wide net is also its strength: no application’s web.config can inadvertently override it, so retail="true" holds no matter what the related web.config settings say.

Web Configs, Transform!

Where’s your Optimus Prime voice when you need one? Up until now, all our configurations have used the strictest settings. When you want to vary your <httpErrors> and <customErrors> settings based on the deployed environment, such as development and testing, you have the luxury of utilizing Configuration File Transforms.

Most likely you’re familiar with the default Web.Debug.config and Web.Release.config build configs. You will see a decent amount of information in their comments to help you get started, but we could easily transform our <httpErrors> errorMode for a different environment:

<system.webServer> <httpErrors xdt:Transform="SetAttributes(errorMode)" errorMode="Detailed"/> </system.webServer>

Tracing, Diagnostics and Logging, Oh My!

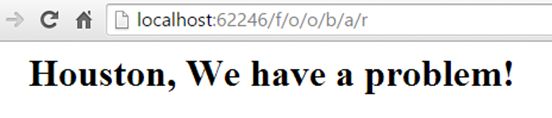

When we think of tracing, diagnostics and logging, we might naturally think of distinctly different tasks within our application. In actuality, they share a primary goal, which is to collect information about our application. It is that accumulated information that could be used to expose a security flaw within our application, extract sensitive information or add to the profile a malicious user is assembling, as we discussed earlier.

There are too many tracing, diagnostic and logging libraries, each varying in their ASP.NET support, to do a complete rundown of every library’s security best practices. Therefore, I have chosen a few of the more popular libraries, each with a distinct purpose, to talk about the security concerns when they are not properly hardened. Even if we don’t discuss the library you are using, it should be clear what you need to watch for to ensure the tools you use to gather application information are not subjecting your application to a security misconfiguration.

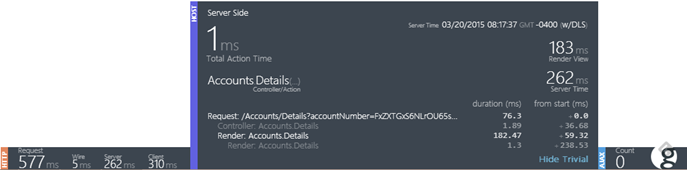

Glimpse

Glimpse is one rock star diagnostic tool that makes diagnostic gathering dead simple. If you have used it, you’ll know exactly what I mean.

However, left unchecked, it also has the potential to expose sensitive data simply by exposing your application’s internals. Out of the box it’s dead simple to turn on, but Glimpse does take steps to ensure that its reporting abilities are only available locally.

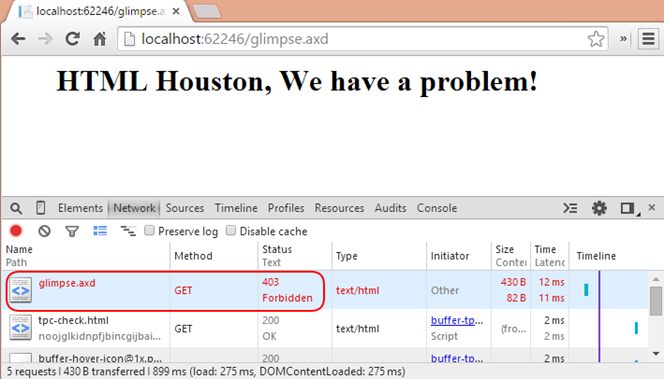

However, like other resources, glimpse.axd is a resource that provides a control board for Glimpse’s capabilities within your application. Protecting this resource and ensuring that not just anyone can access it should be a priority.

At a minimum, we can again use configuration file transforms to completely turn off glimpse in our production Web.Release.config:

<glimpse xdt:Transform="SetAttributes(defaultRuntimePolicy)" defaultRuntimePolicy="Off"></glimpse>

With this in place, requests to glimpse.axd return a 403 Forbidden HTTP status code. With some additional <error> elements in our IIS <httpErrors> section, we could easily serve up a custom error page if we chose to:

<httpErrors errorMode="Custom"> <remove statusCode="403" /> <error statusCode="403" path="403.html" responseMode="File" /> </httpErrors>

The only problem is that we still return a 403 Forbidden HTTP status code. While this is appropriate and correct, there is a fundamental concern with informing the user that the resource they don’t have access to does indeed exist.

There have been tons of debates on whether forbidden and unauthorized resources should be obscured from a malicious user. I am one of those who advocate that if we can take a step toward obscuring the existence of a resource the user doesn’t have access to, we should take it. Unfortunately, the hoops required to actually return a 404 Not Found instead of the 403 are not easy and require a hacky-ish approach. This article is already long as it is, so I am leaving that for a post in the very near future.

Finally, Glimpse does provide a middle ground where we can programmatically supply security policies that it will evaluate to decide whether someone should have access to the Glimpse.axd resource and what level of diagnostics should be performed. They make this so easy that they even provide an example in GlimpseSecurityPolicy.cs that you only need to uncomment to put one in play. You can read more on using security policies here.

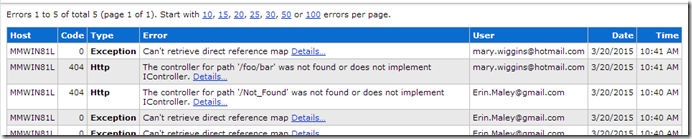

ELMAH

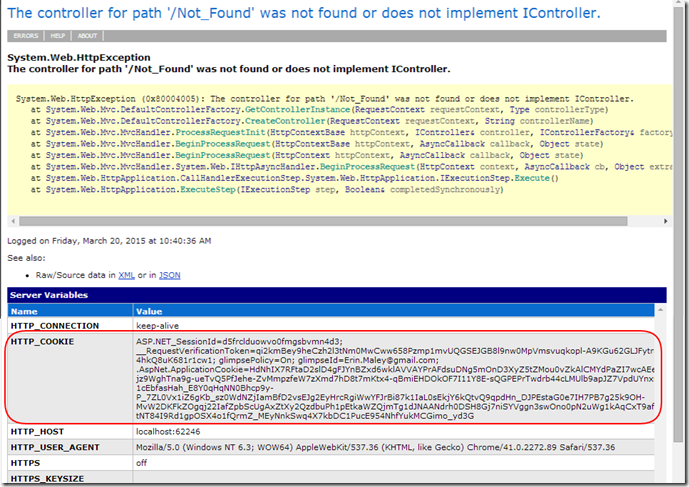

ELMAH is the popular error logging facility for ASP.NET applications that makes your life a lot easier when troubleshooting. It is very simple to drop ELMAH into an application and start collecting a ton of information on unhandled application exceptions. Similar to Glimpse, ELMAH makes accessing that information ever so easy through a web page served from your application’s domain.

When we talk about sensitive data exposure, ELMAH, due to the nature of the tool, is at the top of the list for potentially exposing sensitive data or data that can be used maliciously against our application.

Therefore, just as with Glimpse.axd, we must take the necessary precautions to ensure these tools are not accessible by just anyone, anywhere. ELMAH does turn off remote access altogether by default, but if you need remote access or want to regulate access to ELMAH, there are a couple of ways.

1) As outlined by ELMAH’s security documentation, you can add a <location> section to your web.config and specify access controls:

<location path="elmah.axd" inheritInChildApplications="false">
  <system.web>
    <httpHandlers>
      <add verb="POST,GET,HEAD" path="elmah.axd" type="Elmah.ErrorLogPageFactory, Elmah" />
    </httpHandlers>
    <authorization>
      <allow roles="admin" />
      <deny users="*" />
    </authorization>
  </system.web>
  <system.webServer>
    <handlers>
      <add name="ELMAH" verb="POST,GET,HEAD" path="elmah.axd" type="Elmah.ErrorLogPageFactory, Elmah" preCondition="integratedMode" />
    </handlers>
  </system.webServer>
</location>

You can even use configuration file transforms again to apply the required authorization only to specific environments, such as in Web.Release.config:

<configuration xmlns:xdt="http://schemas.microsoft.com/XML-Document-Transform">
  <location path="elmah.axd" inheritInChildApplications="false" xdt:Transform="Insert">
    <!-- same as above -->
  </location>
</configuration>

2) Another nice option is ELMAH MVC, which has been around for a few years. This library makes it easier to properly secure ELMAH in ASP.NET MVC applications.

Tracing

I think you are seeing the importance of ensuring the surface areas of our application are properly configured from a security standpoint. It wouldn’t be hard to continue on with a number of other popular data gathering tools like Glimpse and ELMAH, but I’ll tidy up this topic by quickly talking about securing the standard ASP.NET tracing and debug capabilities.

If you’re using tracing, you want to ensure that you are not rolling it out to production; preferably, remove it completely:

<system.web> <trace xdt:Transform="Remove"/> </system.web>

Web.Config

While we have been heavily focused on the importance of securing the data collection components of our application, another related area that also happens to reside in our web.config is our database connection strings. I am going to assume that you recognize the sensitivity of a raw database connection string, so I won’t belabor the security risk implied if that information were easily accessible.

In development, we might see a typical connection string:

<connectionStrings>
  <add name="queryOnly"
       connectionString="Data Source=.\;Initial Catalog=OWASP;User ID=query;Password=SomePassword;MultipleActiveResultSets=True;Application Name=EntityFramework"/>
</connectionStrings>

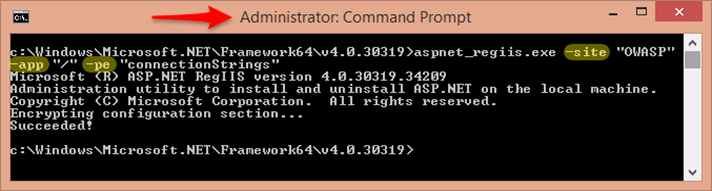

Therefore, having the ability to hide that information through encryption for production use is absolutely essential. One obvious benefit of encrypting this information is that access to the file will not automatically reveal your database credentials. If you do some searching you’ll find ways to automate this, but sticking with the bare bones, we can utilize the ASP.NET IIS registration tool, aspnet_regiis, to encrypt different sections of our web.config.

Essentially, you run an aspnet_regiis command from a command prompt and provide information about your application so the tool can encrypt the parts of the web.config that we identify. If your site is hosted in IIS, the command would look like the following:

c:\Windows\Microsoft.NET\Framework64\v4.0.30319>aspnet_regiis.exe -site "<sitename>" -app "<web.config-location>" -pe "<section-name>"

Here we have started from the .NET Framework directory associated with our application to access the aspnet_regiis executable. We specify the name of our site in IIS with -site and the virtual path at which the web.config will be found with -app. Finally, -pe allows us to specify the section within our <configuration> to encrypt.

For our concerns, we want to encrypt the <connectionStrings> section.

NOTE: The MSDN documentation doesn’t mention a requirement to run the tool as an administrator, but I have come across more than one article pointing out this possible requirement. I haven’t tested it, but you might want to use “Run as Administrator” when opening the command prompt to avoid decryption issues.

The results will look something like below:

<connectionStrings configProtectionProvider="RsaProtectedConfigurationProvider">

<EncryptedData Type="http://www.w3.org/2001/04/xmlenc#Element"

xmlns="http://www.w3.org/2001/04/xmlenc#">

<EncryptionMethod Algorithm="http://www.w3.org/2001/04/xmlenc#tripledes-cbc" />

<KeyInfo xmlns="http://www.w3.org/2000/09/xmldsig#">

<EncryptedKey xmlns="http://www.w3.org/2001/04/xmlenc#">

<EncryptionMethod Algorithm="http://www.w3.org/2001/04/xmlenc#rsa-1_5" />

<KeyInfo xmlns="http://www.w3.org/2000/09/xmldsig#">

<KeyName>Rsa Key</KeyName>

</KeyInfo>

<CipherData>

<CipherValue>W2FOi8XzHhBgwXyICnzhJMwMdNVRr8zJRpphRb5B5G1sNUxmruRjJ6zJYj17b3aQ6SDuaExd3cGz3pmT6pr7R6qKLnwXb9LjDJDWoqpRjFBVTYPispZBzErKA0f6kqfq8GC/iud5hjz5lGQDS/wI4dhctRJuDfj4TqPfhJSMkzMvDjqtt1gKWP8n1hvFcvC0FXxMXb1rqLHUebOfeyBWC0dnyT75HaovyLORGPqhz0scmToTydWEax4mKJHj6I0VKq7g2aNP+6Tb3utMG1bLGaV6Iwo39groFQEwh7efEL7EsRMhK5Q+CVYaWMifeoeWSUFF4Q7tcHWoAtTZZuo9jQ==</CipherValue>

</CipherData>

</EncryptedKey>

</KeyInfo>

<CipherData>

<CipherValue>jIKxdvBHeJlg5EP440mVCCblfdYdbgzsG3fFQoJ7tK/gOLXwqgL2TxG+wj1yURQb2fIusWlTK0wVHS2abcV7EEZffl3/etRSlK1x3Iw471S/MRsp1XnGRqSVSSzUeMDTSKTEF9l/daMD6aLougGbGQoOSahD+Oksg3O5qPj1aEO3yP/jybGIp4HrCbaWRamshN8fv1/sO3l+8ztv0l7IaKoidt7YVZCjIuFXYIXz9iFHdaSZ5Px+xNNR30dSqnP67tF7ajXgtN5ejOcsig89tg1KEUpo9o9p1ftdCjuuFwYkZE+z7Otl+jRtDow9BbOGdwfNoonDYYKkeMzvNSu09n9Nsd52wc2mGhKnOUHcLlacnp3vQOs1ehbt0nWsZ4a/h0GJJvuiWkEUJQo+5y2+N8pB+iOAzQdNps6EqzvlXMXJztSUMfBMGck3iyhb2/u5Yg2pBNWZKH83pgZn2Cvlrf3Orxqpvl7di4MHckFDMBJair6/VC9I2oIzI63O7QU5yihcl3On3JMUB0iVVHXE3Vtp6uG4MipayNkSKyaB9SOcsp3/KQ1yyltk97O177TTAZ3H7nGA+mI0EDl0ChOA2ANRJBpsgunm7D/ZoYTJOlRVSocUMHFRCnKhEMwln5F1Z1bvYKou9xVwReQURU0a8kjbkYERlKsOfbJaV+53R3+9RzUxlsOgSnkhUzuEYp/X9ptN+NO2F4M91COsIoewLUksPGzRrlTM+VcAZJNrf5mCqpNUebSYrwWc3VkgyTxtmr3llroPFdqwGGmmf52DdtbQwjlCOnYDmq2CFDW1XdRxkulDB1rmEYMJgdGF6RVw5D3FivDeBTC3q6shFq6GdGJAAHvFCN+dR32LWAZrZxB64Jq7P3H96+r3JFAzk5Nh</CipherValue>

</CipherData>

</EncryptedData>

</connectionStrings>

Finally, a last note on the RSA keys being used: in the examples shown, we have taken all the defaults regarding where the key used for encrypting and decrypting is stored. Therefore, we can’t just up and move the application files with the web.config to any computer, as the key to decrypt would not be available there. However, aspnet_regiis provides a fairly robust set of options for specifying the location and other qualities of the keys involved; you’ll just need to consult the documentation.

NOTE: Unencrypted connection strings give a malicious user who has obtained them a direct leap-point to a completely separate layer of our security misconfiguration template, the database (which we’ll be reviewing shortly).

Are You Down with OPP?

Other People’s Programming: Libraries, Packages and Frameworks Up to Date?

This one is quite easy and straightforward, but unless you are writing everything from scratch (which we know you aren’t, or much of this article would be of no use), you are relying heavily on 3rd party libraries and frameworks to help build your web application.

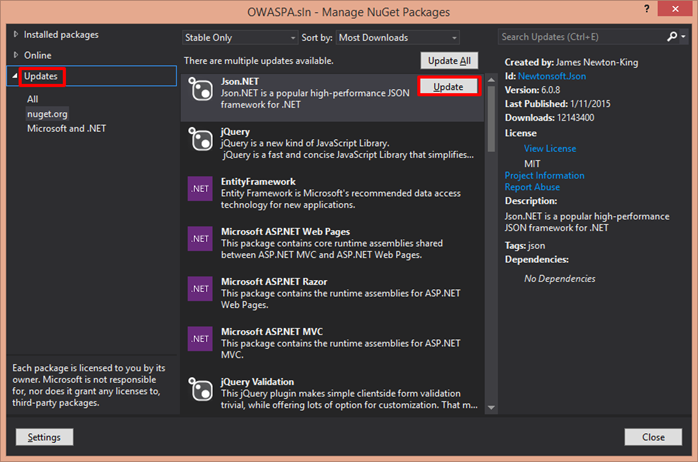

That being said, it is still easy to fall out of the habit of checking whether there have been new releases of the libraries, packages and frameworks you are using. Really good authors patch security vulnerabilities quickly, but the longer you stay on an older version, the longer you are at risk, despite the fact that the author has patched that vulnerability.

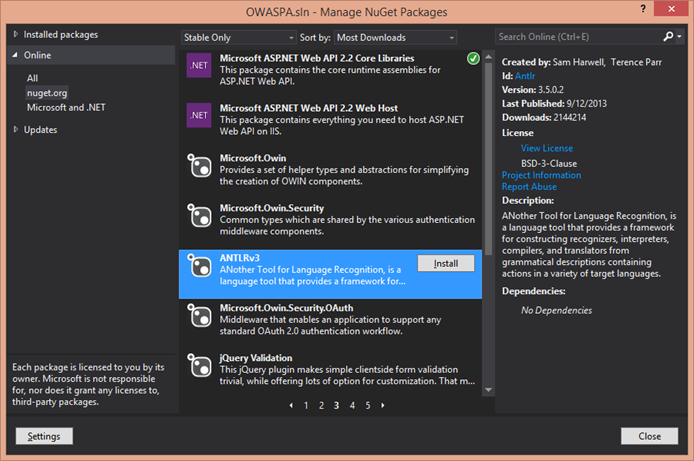

By now, NuGet has established itself as the preferred package manager for ASP.NET applications, making libraries and frameworks easy to install:

But from a security standpoint, an even better feature is the ease of updating those installed packages when authors release updates to their libraries and frameworks.

Unfortunately, we have all worked on projects where not all 3rd party libraries and frameworks are available through a package manager like NuGet. Therefore, you need to find some routine/system/way to keep the OPP that has been manually incorporated into your application up to date.

Database

When we think of an application, it’s not easy to imagine a scenario where some type of database is not involved. It’s one of those essential essentials. For the bulk of web applications you come across, data is the lifeblood of the application. Though a database might not be the only option for accessing that lifeblood, it is definitely the most predominant, and that includes your cloud applications (what do you think sits behind those storage services in many cases?).


We just wrapped up discussing how to protect the keys to our database from the framework layer. Now, we’re going to look at protecting the data that the database is hosting using our good friend, the Principle of Least Privilege. The basic concept is that we only provide the bare minimum privilege required for the action being performed. If we’re only performing a query on the data, why the need for write privileges? Why full database access when we only need to update a single table?

All these questions exist because it’s easier for developers to simply have one database connection using a single account that has full or near-full privileges to the database. However, when mitigations against other security risks such as SQL injection fail, limiting the level of database access provides an additional layer of protection.

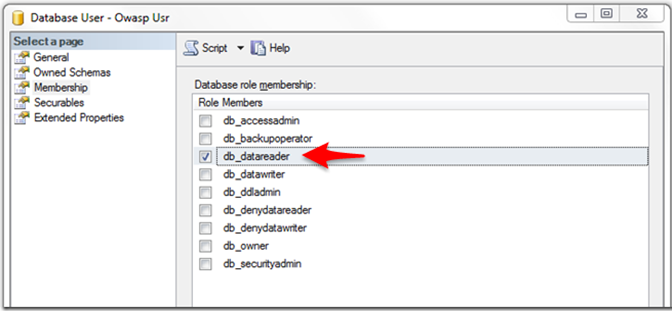

For us, this basically means diversifying the accounts the application uses and enforcing that each account operates under the minimal permissions needed to carry out its objective. In SQL Server, we can accomplish this by specifying the role of a specific database user such as db_datareader:

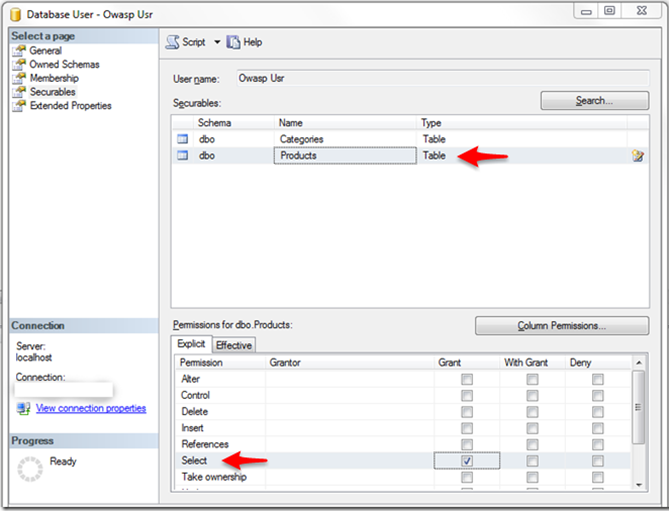

In addition, we can be more restrictive and define exactly which objects the account can access and what permissions it has on them. Again with the SQL Server example, restricting this user to only performing SELECT statements on a table called PRODUCTS is done at the user level.

What does this do for us? Continuing with the example of a SQL injection attack, restricting the application to an account with minimal permissions ensures that a malicious write command cannot be carried out under an account that only has privileges to query.

NOTE: This wouldn’t stop a malicious read query that attempts to leak unintended records or database schema information, but that is covered by the safeguard of query parameterization.

Finally, properly securing SQL Server is not strictly a matter of regulating user roles and privileges, so keep in mind that there are other hardening steps to consider. It was only very recently discovered that nearly 40,000 MongoDB databases were insecure simply because their owners failed to read the documentation related to security. However, from a developer’s perspective, the steps above are ways that you can make a direct and immediate impact on the quality of security within your application.

Front-end Client

I first introduced the concept of considering the front-end of the web stack when we talked about dealing with XSS security risks. You might notice that OWASP’s documentation doesn’t explicitly name front-end frameworks and libraries, but that doesn’t mean it’s an area we can ignore.


Again, we can’t cover every possible aspect across the plethora of JavaScript frameworks, let alone libraries. What we can do is use AngularJS to bring to light security configuration to consider, as well as some broader areas to keep in mind.

Error Handling

When we introduce a front-end framework, nothing changes when it comes to proper error handling. We still need to be mindful of what information is being propagated to the user. The risks of disclosing information not meant to be seen by the user because the proper control gates were not in place, or of sending the wrong message because of inconsistent behavior, don’t change with a SPA.
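As a minimal sketch of that mindfulness, the function below maps a failed HTTP response to a message that is safe to display, without ever echoing the response body. The name toUserSafeError and the message texts are assumptions made for illustration; they are not part of Angular or of the application described in this series.

```javascript
// Hypothetical helper: builds a display-safe error from a failed HTTP response.
// Only the status code is read; the response body is deliberately ignored so
// stack traces or library names sent by the server never reach the user.
var SAFE_MESSAGES = {
  401: 'Please sign in to continue.',
  404: 'We could not find what you were looking for.'
};
var GENERIC_MESSAGE = 'Something went wrong. Please try again later.';

function toUserSafeError(response) {
  var status = response && response.status;
  var known = Object.prototype.hasOwnProperty.call(SAFE_MESSAGES, status);
  return {
    status: status,
    message: known ? SAFE_MESSAGES[status] : GENERIC_MESSAGE
  };
}
```

Anything not explicitly listed falls back to the generic message, so a new server-side failure mode cannot accidentally introduce a new leak on the client.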

Angular makes it possible to ensure a consistent user experience when we receive HTTP status codes from the server through the use of interceptors.

(function() {
    // 'use strict';
    var errorApp = angular.module('errorApp', ['ngRoute']);

    // Routes, including the pages the interceptor sends users to on failure
    errorApp.config(['$routeProvider',
        function($routeProvider) {
            $routeProvider
                .when('/', {
                    templateUrl: 'home.html',
                    controller: 'HomeController'
                })
                .when('/login', {
                    templateUrl: 'login.html'
                })
                .when('/404', {
                    templateUrl: '404.html'
                })
                .when('/error', {
                    templateUrl: 'error.html'
                })
                .otherwise({
                    redirectTo: '/'
                });
        }
    ]);

    // Maps failed HTTP responses to a consistent set of pages
    errorApp.factory('httpResponseInterceptor', ['$q', '$location', function($q, $location) {
        return {
            response: function(data) {
                return data;
            },
            responseError: function error(response) {
                switch (response.status) {
                    case 401:
                        $location.path('/login');
                        break;
                    case 404:
                        $location.path('/404');
                        break;
                    default:
                        $location.path('/error');
                }
                return $q.reject(response);
            }
        };
    }]);

    // Register the interceptor for every $http request
    errorApp.config(['$httpProvider',
        function($httpProvider) {
            $httpProvider.interceptors.push('httpResponseInterceptor');
        }
    ]);
})();

NOTE: The above is only an example applying a broad-stroke behavior. It wouldn’t be applicable if you needed to handle individual HTTP responses case by case or per service. The point is to stop and make sure you are not leaking information through an error, or inadvertently raising flags for a malicious user, such as with 500 status codes.
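If you want to verify that behavior without spinning up Angular, one option is to pull the status-to-route decision into a plain function. The sketch below assumes the same /login, /404 and /error routes as the example; routeForStatus and createResponseInterceptor are hypothetical names, and the $q and $location arguments can be simple fakes in a test.

```javascript
// Hypothetical helper: maps an HTTP status code to one of the error routes.
function routeForStatus(status) {
  switch (status) {
    case 401:
      return '/login';
    case 404:
      return '/404';
    default:
      return '/error';
  }
}

// Builds the interceptor from its dependencies, so the same function can be
// handed to Angular's injector or called directly with fakes.
function createResponseInterceptor($q, $location) {
  return {
    responseError: function (response) {
      $location.path(routeForStatus(response.status));
      return $q.reject(response);
    }
  };
}
```

Registering it should look the same as before, for example errorApp.factory('httpResponseInterceptor', ['$q', '$location', createResponseInterceptor]).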

CORS

Cross-site HTTP requests are nothing new to web development, but with the ever-growing development of SPAs, CORS is a mechanism we increasingly depend on. In Angular, we can influence CORS behavior in our applications through certain core provider configurations, such as the $http provider. Being able to send cookies on cross-site HTTP requests is powerful and convenient.

Angular makes this easily configurable at the provider level, so it is just “on” for every request, by simply setting the withCredentials property to true. But should we?

angular.module('errorApp')
    .config(['$httpProvider', function($httpProvider) {
        $httpProvider.defaults.withCredentials = true;
    }]);

When dealing with credentialed XMLHttpRequests (the underlying request of Angular’s $http service when we set withCredentials = true), it is important to understand the ramifications of these settings. For one, if the cookies included in the request contain authentication information, you had better make sure the request occurs over secure HTTP (HTTPS).

This might be the time to step back and ask whether a global setting such as the above is the right approach. It only takes one AJAX call over plain HTTP for those cookies to be sent along inadvertently. Now, this can easily be prevented by ensuring the Secure flag is set on the cookie:

Set-cookie: mycookie=value; path=/; secure
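Both safeguards can be sketched in a few lines. The first function decides per request whether credentials should be sent, as an alternative to the global default; the second builds a cookie header with the Secure flag. The origin https://api.example.com and both function names are assumptions for illustration, and the HttpOnly flag is an addition not discussed above (it keeps scripts from reading the cookie).

```javascript
// Hypothetical trusted API origin; replace with your own.
var TRUSTED_API_ORIGIN = 'https://api.example.com';

// Returns an $http-style config that enables credentials only for absolute
// HTTPS URLs on the trusted origin. Anything else goes out without cookies.
function requestConfigFor(url) {
  var parsed;
  try {
    parsed = new URL(url);
  } catch (e) {
    // Relative or malformed URL: not a cross-site call we want to credential.
    return { withCredentials: false };
  }
  return { withCredentials: parsed.origin === TRUSTED_API_ORIGIN };
}

// Builds a Set-Cookie header value that browsers will only send over HTTPS.
function buildSetCookie(name, value) {
  return [
    name + '=' + encodeURIComponent(value),
    'Path=/',
    'Secure',
    'HttpOnly'
  ].join('; ');
}
```

With Angular, the first helper could be used as $http.get(url, requestConfigFor(url)), leaving $httpProvider.defaults.withCredentials at its default of false.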

All this talk about credentialed XMLHttpRequests and cookies brings us to our last discussion point on security misconfiguration concerns with the front-end.

Authentication

Authentication fits more aptly into a category such as OWASP’s Broken Authentication, but there is a configuration side to most technology, and comprehending the pertinent points is crucial. For a considerable amount of time, cookie-based authentication has been the dominant choice for web applications, and it continues to be widely used. More recently, however, we have seen the continued rise of token-based authentication as a preferred option, especially in an age where sign-in through third-party authorization services such as Facebook and Google is almost expected.

But there are trade-offs with each, and knowing what those are is essential. Generally speaking, while token-based authentication might help mitigate CSRF vulnerabilities (something cookies are a prime candidate for), it is also not simple and can easily be configured incorrectly.

For instance, understanding the storage options for tokens is essential from a security perspective. It is probably one of the easier configuration choices to make, but there is still much to consider. While tokens protect you from CSRF vulnerabilities, storing them in client-side storage once again leaves you susceptible to XSS unless the proper security measures, such as encoding and sanitizing user input, are in place.

Understanding how each browser treats local storage and session storage, the lifetime of the data and when it is purged is important. Session storage can provide additional security over local storage simply because the data is scoped to a single tab and only lives as long as that tab is open. On the other hand, cookies can also provide a worthy delivery mechanism (not used for authentication, simply for delivery of the token).
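To make the storage choice explicit rather than scattered through the code base, the token can be kept behind a small wrapper that receives the storage object as an argument. This is only a sketch: createTokenStore, authorizationHeader, the auth_token key and the Bearer header scheme are assumptions for illustration, not something prescribed earlier in this article.

```javascript
// Hypothetical wrapper around a Web Storage-like object (setItem, getItem,
// removeItem). Passing the storage in keeps the choice in one place.
function createTokenStore(storage) {
  var KEY = 'auth_token'; // hypothetical storage key
  return {
    save: function (token) { storage.setItem(KEY, token); },
    load: function () { return storage.getItem(KEY); },
    clear: function () { storage.removeItem(KEY); }
  };
}

// Builds the request header for a token, or no header at all when there is
// no token, so an empty credential is never sent.
function authorizationHeader(token) {
  return token ? { Authorization: 'Bearer ' + token } : {};
}
```

In a browser this might be wired up as createTokenStore(window.sessionStorage), and switching to another storage later would be a one-line change. Either way, the token remains readable by any script running on the page, which is why the XSS defenses mentioned above still matter.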

Conclusion

We have covered a lot, I mean, a ton. Truthfully, there is a lot more when you consider web server host security and TLS/SSL configuration, just to name a few areas we didn’t touch. If you take anything from here, it’s that going live is not strictly about propping up an application and slapping on some features to satisfy your client’s requirements. There are many layers to our rich, user-engaging applications, each employing different technologies, and each of these technologies requires proper security hardening in order to help ensure the highest level of security for your application.