- Part 1: Containerize your Application Environment

- Part 2: Creating a Developer Image

- Part 3: Hot Module Reloading and Live Editing in Containers

- Part 4: Composing Multi-container Networks

- Sharing Images with Your Team Using Docker Hub (this post)

- Bonus: Debug Docker Containers with WebStorm

If you are just joining, you might want to follow the Docker tutorial from the beginning. In Part 4 we finished splitting our development environment into isolated containers and isolated networks using Docker Compose, while keeping the ability to develop our application and propagate live updates to the containers running it. In this post we’ll see how Docker Hub helps us accomplish the last of our goals.

Despite these accomplishments, I believe the true payoff of Docker for developers comes when we are able to share those images. Sharing demonstrates that the changes required to run a complex development environment like the one we saw can be kept isolated from our host.

Therefore, in this final tutorial of the series, we’re going to see how quickly a team of developers can get up and running with a complex development application environment.

Getting Started Sharing with Docker Hub

You can grab the source code for the application from GitHub. For the most part, there aren’t any major changes, only minor image name changes, so that we can quickly see how to get up and running with a development environment.

Step 1: Up and Running

We’re going to do something a bit different this round and start off with a demonstration that you’re going to participate in.

- Open terminal/prompt

- Remove all Images and Containers:

docker rm $(docker ps -a -q)

docker rmi $(docker images -q)

- Navigate to the root of the repository you pulled from GitHub, labeled “docker4devs-sharing-en”

- Run the command

docker-compose up

So within minutes we went from nothing but some source code to a modularized development application environment consisting of a reverse proxy, a database and an application. We didn’t need to install MongoDB or Nginx locally, manually install the necessary npm packages or start our Node application by hand. This all happened for you.
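One caveat with the two cleanup commands above: when there are no containers or images at all, the inner command prints nothing, and docker rm / docker rmi then exit with a usage error because they receive no arguments. A minimal sketch of a guard, assuming a POSIX shell; run_if_any is a made-up helper name for this example, not a Docker feature:

```shell
#!/bin/sh
# run_if_any is a hypothetical helper, not part of Docker.
# It reads IDs from stdin and appends them to the given command,
# or reports that there is nothing to do when stdin is empty.
run_if_any() {
  ids=$(cat)
  if [ -z "$ids" ]; then
    echo "nothing to do for: $*"
    return 0
  fi
  # Unquoted on purpose: each ID becomes its own argument.
  "$@" $ids
}

# Usage (destructive: removes every stopped container and every image):
#   docker ps -a -q  | run_if_any docker rm
#   docker images -q | run_if_any docker rmi
```

Running containers still make docker rm fail, so stop them first if you have any.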

What did we do?

- We removed all images and containers to show that we had nothing to begin with.

- We ran docker-compose up.

- Docker Compose pulled all missing and dependent images,

- built the referenced images,

- and created and ran the specified containers and network,

- finally starting the entire development application environment consisting of Nginx, Node and MongoDB.

So how did we do it?

Step 2: Docker Hub

The best part is that despite the significance of what we achieved in the prior step, not much has changed since the last tutorial. As a matter of fact, I only changed the names of the images we were originally building and made them available through Docker Hub, Docker’s site for hosting images, and Docker handled the rest.

Step 2a: Create Docker Hub Account

We saw in most of the tutorials that at least some part of an image was built FROM a base image. Most of the time that base image was not one we generated, such as the mhart/alpine-node image we pulled for our production Node image, or the Mongo and Nginx base images that our docker-compose services used. These images all come from Docker Hub. So the first thing we need to do is create an account so we can host our images there as well.

- Go to https://hub.docker.com

- Create an account

- Take note of your “username” (can be found under “My Profile”).

Step 3: Prepare Docker Compose and Dockerfile Files

Now that we have a Docker Hub account, we are ready to prepare our images so that they can be hosted in Docker Hub and available to any Dockerfile or docker-compose.yml that requires them.

Step 3a: Update Dockerfiles and Docker Compose File

docker-compose.yml

- Open docker-compose.yml in the root directory of the project

- Rename the image provided for the “image” option for both the nginx and node services (but not mongo) as shown:

services:
  nginx:
    container_name: nginx
    image: <your dockerhub username>/hh-nginx-dev-i
    # removed for brevity
  node:
    container_name: hackershall-dev-app
    image: <your dockerhub username>/hackershall-dev-i
    # removed for brevity
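After editing the file, it is easy to leave a placeholder behind or break the YAML indentation. As a rough sketch, the check below looks for unreplaced username placeholders and then asks Docker Compose to validate the file with its config command. check_compose_file is a hypothetical helper name, and the placeholder pattern is only an assumption based on the placeholders used in this post:

```shell
#!/bin/sh
# check_compose_file [FILE] is a hypothetical helper, not part of Docker Compose.
# It fails if FILE still contains a "<your...username>" placeholder,
# otherwise it lets docker-compose validate the file quietly.
check_compose_file() {
  file=${1:-docker-compose.yml}
  if grep -n '<your[^>]*username>' "$file"; then
    echo "replace the placeholder(s) above with your Docker Hub username" >&2
    return 1
  fi
  # "config -q" only validates the file and prints nothing on success.
  docker-compose -f "$file" config -q
}

# Usage, from the root of the repository:
#   check_compose_file docker-compose.yml && echo "compose file looks fine"
```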

node.dev.dockerfile

- Open node.dev.dockerfile in the .docker directory

- Update the name of the image listed for the “FROM” as shown
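The updated FROM line itself is not reproduced here. As a sketch only, and assuming that the development image is built on top of your own production image (hackershall-prod-i, which is built first in Step 4a), the edit could be scripted as follows. Both the set_from_image helper and the choice of base image are assumptions for illustration, not something this post states:

```shell
#!/bin/sh
# set_from_image FILE IMAGE is a hypothetical helper.
# It rewrites the first FROM instruction in FILE to "FROM IMAGE" and leaves
# every other line untouched. Anything after the old image name on that
# line (for example a stage alias) is dropped.
set_from_image() {
  file=$1
  image=$2
  tmp=$(mktemp) || return 1
  awk -v img="$image" '
    !replaced && toupper($1) == "FROM" { print "FROM " img; replaced = 1; next }
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through the original file so its permissions are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage (the base image name is an assumption, adjust to your setup):
#   set_from_image ./.docker/node.dev.dockerfile "<your dockerhub username>/hackershall-prod-i"
```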

Step 4: Create Images

Now we are ready to create the images we want to store in Docker Hub, which will allow us to do exactly what we did in the first step.

Step 4a: Build the Node Production Image and the Mongo, Nginx and Node Development Images

We’re going to individually build the images we want hosted in Docker Hub. However, in the Bonus area at the end of this tutorial, I’ll show an easier way of doing this.

- Run the following commands to create each of the dependent Node.js (prod and dev), MongoDB and Nginx images:

Node:

docker build -t <your-hub-account-username>/hackershall-prod-i -f ./.docker/node.prod.dockerfile .

docker build -t <your-hub-account-username>/hackershall-dev-i -f ./.docker/node.dev.dockerfile .

Nginx

docker build -t <your-hub-account-username>/hh-nginx-dev-i -f ./.docker/nginx.dev.dockerfile .

Mongo

docker build -t <your-hub-account-username>/hh-mongo-dev-i -f ./.docker/mongo.dev.dockerfile .
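Since the four commands differ only in image name and Dockerfile, they can be wrapped in a small loop. This is a minimal sketch, assuming it is run from the repository root; build_all is a hypothetical helper name. The production image is built first on the assumption that the development image may depend on it:

```shell
#!/bin/sh
# build_all USERNAME is a hypothetical helper that runs the four
# "docker build" commands from Step 4a for the given Docker Hub username.
build_all() {
  user=$1
  if [ -z "$user" ]; then
    echo "usage: build_all <docker-hub-username>" >&2
    return 1
  fi
  # image-name:dockerfile pairs, production image first
  for pair in \
    hackershall-prod-i:node.prod.dockerfile \
    hackershall-dev-i:node.dev.dockerfile \
    hh-nginx-dev-i:nginx.dev.dockerfile \
    hh-mongo-dev-i:mongo.dev.dockerfile
  do
    name=${pair%%:*}   # text before the first colon
    file=${pair#*:}    # text after the first colon
    docker build -t "$user/$name" -f "./.docker/$file" . || return 1
  done
}

# Usage: build_all <your-hub-account-username>
```

The loop stops at the first failed build, so a broken production image is not silently followed by the others.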

Step 5: Push Images to Docker Hub

With our images built locally, we’ll now push them to Docker Hub and make them available to Docker and Docker Compose.

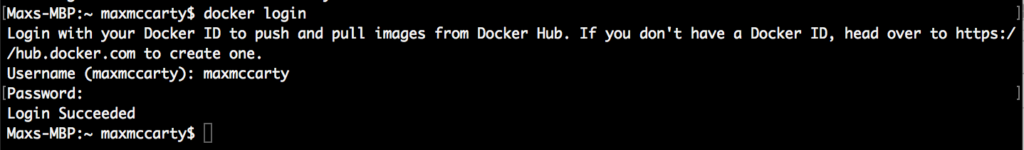

Step 5a: Log into Docker Hub

- At the terminal/prompt, type:

docker login

- Provide username (not email) and password that you created in Step 2a.

Step 5b: Push images to Docker Hub

- Push the Node.js production and dev images:

docker push <your-acct-username>/hackershall-prod-i

docker push <your-acct-username>/hackershall-dev-i

- Push Mongo and Nginx Images

docker push <your-acct-username>/hh-mongo-dev-i

docker push <your-acct-username>/hh-nginx-dev-i
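The four pushes can also be scripted. A minimal sketch, assuming you are already logged in as in Step 5a and that the images were built under the names used above; push_all is a hypothetical helper name:

```shell
#!/bin/sh
# push_all USERNAME is a hypothetical helper that pushes the four images
# built in Step 4a to Docker Hub, stopping at the first failure.
push_all() {
  user=$1
  if [ -z "$user" ]; then
    echo "usage: push_all <docker-hub-username>" >&2
    return 1
  fi
  for name in hackershall-prod-i hackershall-dev-i hh-mongo-dev-i hh-nginx-dev-i; do
    docker push "$user/$name" || return 1
  done
}

# Usage: push_all <your-acct-username>
```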

Step 6: Putting It All Together

We’ve completed the steps for making the images available to Docker and allowing someone using Docker to easily pull, build and run an entire development application environment.

But How?

- We built the images that “docker-compose.yml” requires individually and

- pushed them to Docker Hub.

- When docker-compose up is run, Docker will look for the images specified in the “docker-compose.yml” file locally first, then in Docker Hub.

- Just as we saw ourselves in Step 1 – if we provided our repository to someone who has Docker installed, they too could easily run docker-compose up and have our development application environment up and running quickly.
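The local-first lookup described in the list above can be checked by hand before running docker-compose up. A small sketch, assuming a Docker CLI that supports the docker image inspect subcommand; image_source is a hypothetical helper name:

```shell
#!/bin/sh
# image_source IMAGE is a hypothetical helper.
# It prints "local" when IMAGE is already in the local image cache and
# "registry" when Docker would have to pull it (from Docker Hub by default).
image_source() {
  if docker image inspect "$1" >/dev/null 2>&1; then
    echo "local"
  else
    echo "registry"
  fi
}

# Usage:
#   image_source <your dockerhub username>/hackershall-dev-i
```

Right after the cleanup in Step 1 every image would report "registry", and after the first docker-compose up they would report "local".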

Bonus

Remember how I mentioned in Step 4a, when we built the images individually before pushing them to Docker Hub, that there is an easier way? Well, part of the changes I made was removing the “build” option from each of the “docker-compose.yml” services.

Instead of building each image individually, we could have added the build option to each of the “docker-compose.yml” services and had Docker Compose build all of them, like so:

Update docker-compose.yml

- Add the following highlighted “build” options under the “services” section of the docker-compose.yml file

services:
  nginx:
    container_name: nginx
    image: maxmccarty/hh-nginx-dev-i
    build:
      context: .
      dockerfile: ./.docker/nginx.dev.dockerfile
    # removed for brevity
  node:
    container_name: hackershall-dev-app
    image: maxmccarty/hackershall-dev-i
    build:
      context: .
      dockerfile: ./.docker/node.dev.dockerfile
    # removed for brevity
  mongo:
    container_name: hackershall-dev-mongodb
    image: maxmccarty/hh-mongo-dev-i
    build:
      context: .
      dockerfile: ./.docker/mongo.dev.dockerfile
    # removed for brevity

Build Images Using Docker Compose

- You would still build the Node.js production image separately (the first command in Step 4a). However, instead of building each of the development images for Mongo, Node.js and Nginx, you would only have to run the following:

docker-compose build

Now you will find that the other three images have been built for you with one command.
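Putting the bonus together with Step 5, the whole build-and-publish flow can be sketched in one function. This assumes the “build” options shown above are present in docker-compose.yml, that you are logged in to Docker Hub, and that your Docker Compose version provides the push command; build_and_push is a hypothetical helper name:

```shell
#!/bin/sh
# build_and_push USERNAME is a hypothetical helper.
# The production image is not a compose service, so it is built and pushed
# with plain docker; the three development images go through docker-compose.
build_and_push() {
  user=$1
  if [ -z "$user" ]; then
    echo "usage: build_and_push <docker-hub-username>" >&2
    return 1
  fi
  docker build -t "$user/hackershall-prod-i" -f ./.docker/node.prod.dockerfile . &&
  docker-compose build &&
  docker push "$user/hackershall-prod-i" &&
  docker-compose push
}

# Usage, from the root of the repository:
#   build_and_push <your-hub-account-username>
```

Each step runs only if the previous one succeeded, so nothing is pushed after a failed build.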

Conclusion

As you delve into using Docker, you’re going to realize there is so much more to it than we could cover in any reasonable amount of time. There are the various other tool commands, the Dockerfile and docker-compose options and commands, clustering through Docker Swarm and cloud hosting using Docker Cloud, not to mention the Docker container hosting support offered by the other premier cloud providers.

But my goal was to show you how Docker can fast-track developer onboarding for you or your team and remove the need to install and configure environment dependencies on your host computer. I think you have had a chance to really see the potential and power that Docker can provide for software developers like yourself.

Finally, you’ll find that many of the options and configurations could have been approached or set up in a number of ways other than how we did it in this series. Depending on your needs, your application, and your debugging and development scenarios, you might find that different configurations work better. Don’t hesitate to see what other options Docker provides for skinning the same *cat.

*No cats harmed during the production of this tutorial.